Picture a pharma company using GPT-4 to read through tons of scientific articles, speeding up drug discovery. They soon realize it's not all smooth sailing. Customizing the model, managing resources, and keeping sensitive data secure becomes a challenge.

Thus, the need for a systematic approach to managing large language models in a business context.

This is where LLMOps, or Large Language Model Operations, comes into play. As the capabilities of language models expand, managing, deploying, and maintaining them becomes increasingly crucial for businesses across industries.

LLMOps is a fancy way of saying that it deals with the management and deployment of large language models like GPT-4. These models are powerful AI tools, and LLMOps helps make them more accessible and usable. LLMOps' older sibling is the more famous MLOps. It focuses on managing machine learning models and workflows. While LLMOps and MLOps share some similarities, LLMOps specifically targets the challenges tied to large language models.

While LLMOps is getting attention only recently, the buzz around Generative AI is huge. Taking a small detour to get some things in order before getting into the piece, what is Generative AI and what are LLMs.Generative AI refers to algorithms that create new content or data, while large language models (LLMs) are a specific type of generative AI that focus on understanding and producing human language. LLMs use vast amounts of text data to learn patterns, making them capable of generating coherent and contextually relevant responses. LLMs are a powerful and sophisticated subset of generative AI techniques, specifically designed for language processing tasks.

Imagine a case where a truck manufacturing company decides to implement a large language model. How should the company, or any enterprise go about it?

First, identify specific areas where an LLM could provide value, such as customer support, technical documentation, predictive maintenance, or sales and marketing.

Gather relevant data, such as historical customer support interactions, technical manuals, maintenance records, and sales data. Preprocess this data to ensure it's clean and well-structured, removing any sensitive or personally identifiable information.

Fine-tune a pre-trained LLM like GPT-4 on the collected data to tailor the model to the company’s specific domain and use cases. This will help the model better understand the context and vocabulary related to truck manufacturing.

Integrate the fine-tuned LLM into the company’s systems, such as customer support chatbots, technical documentation generation tools, or predictive maintenance platforms.

Continuously monitor the LLM's performance, and gather feedback from users to ensure it meets their needs. Periodically retrain the model with new data to keep it up-to-date and improve its performance.

Integrating an LLM to an enterprise company has its benefits, but there are larger, very relevant questions.

Humanloop is a hot company in the world of LLMOps. It helps you build GPT-4 apps and fine-tune models. Here’s its CEO Raza Habib talking to Y Combinator on how Large Language Models can enhance businesses.

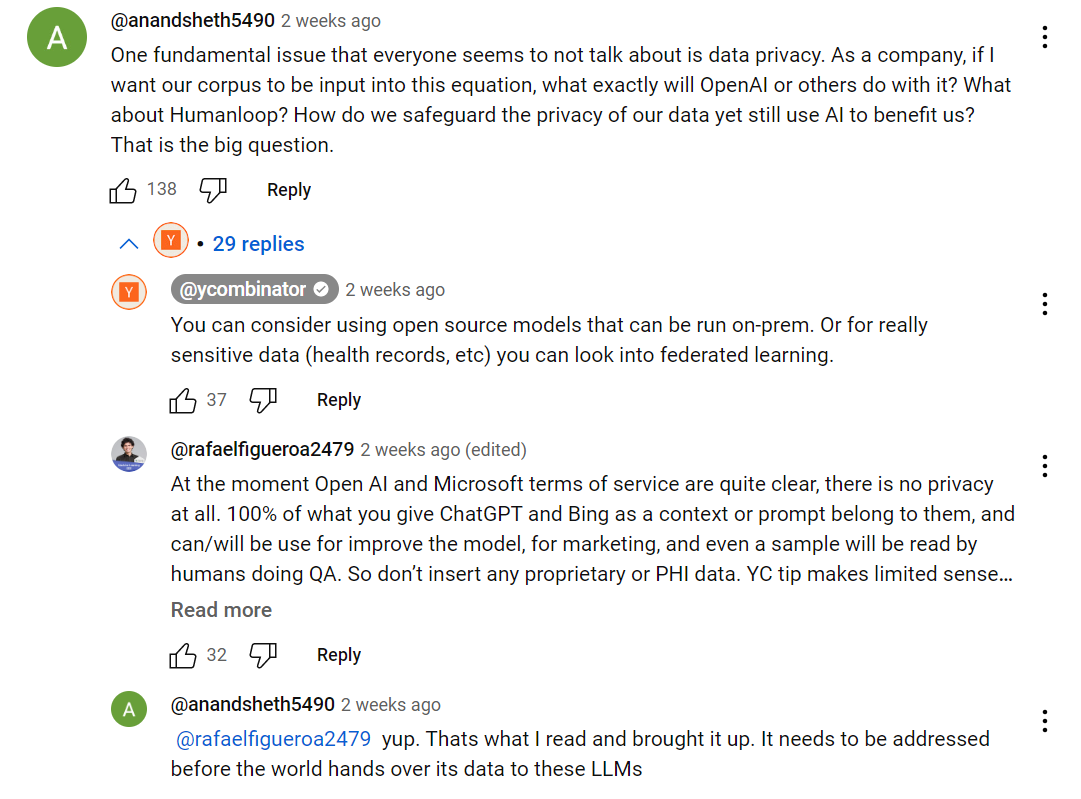

The episode in itself is very informative, but some comments below got my attention. Take this conversation for example. ‘What happens to the data that is used to train these models? What about privacy?”

In this context, what are the potential risks that any company can run into when implementing an LLM?

LLMs can inadvertently learn and propagate biases present in their training data. Companies should ensure the model does not exhibit biased behavior towards certain groups, as this could lead to negative customer experiences or unfair treatment.

Firms should implement robust security measures to protect the LLM from unauthorized access or misuse. This includes securing APIs, controlling access to the model, and monitoring usage patterns for potential threats.

Ensuring the privacy of customer and employee data is crucial. They should use techniques like data anonymization and differential privacy when processing data for the LLM to protect sensitive information.

LLMs can sometimes generate inappropriate or harmful content. Companies should implement mechanisms to filter or moderate the model's output to prevent negative consequences.

Firms should ensure the LLM does not infringe on any copyrights or patents when generating content or making predictions.

Legal and regulatory compliance: Companies should stay informed about relevant laws and regulations related to AI and language models, ensuring their LLM complies with all applicable requirements.

A lot of my research and understanding about LLMOps were a result of my interactions with GPT-4 itself. Here’s what it told me around security.

My prompt: When you mean security. Do you mean that GPT-4 can take private information and misuse it?

GPT-4: “When I mention security, I am referring to the potential for unauthorized access to or misuse of the deployed GPT-4 model and its associated data. While the GPT-4 model itself does not have intentions or the ability to misuse information, bad actors could try to exploit the model or the system it's deployed in to gain access to sensitive information.

For example, if an attacker gains unauthorized access to GPT-4 implementation, they could potentially use the model to generate sensitive information, such as trade secrets, by providing inputs that prompt the model to reveal that information. This risk could arise if the fine-tuned model was trained on confidential data and inadvertently memorized some of it.

To mitigate such security risks, they should:

Secure the deployment: Implement strong authentication and access control mechanisms to prevent unauthorized users from accessing the GPT-4 model.

Monitor usage: Regularly monitor the usage patterns of the GPT-4 model to identify any unusual or suspicious activity, which could indicate unauthorized access or misuse.

Data handling: Be cautious when using sensitive data for fine-tuning the model. Remove any sensitive or personally identifiable information from the training data, and consider using techniques like differential privacy to protect the privacy of the data.

Limit information leakage: Implement mechanisms to limit the model's ability to reveal sensitive information, such as restricting the model's response length or adding constraints to its output.

Regular updates and patches: Keep the system and software up-to-date to minimize vulnerabilities that could be exploited by attackers.

By taking these precautions, the company can minimize the risks associated with deploying a GPT-4 model while still benefiting from its capabilities.”

Our thoughts: More and more people would direct resources and money into solving these problems.

In fact, enterprise AI tools like Writer already is solving some of these issues. “What you write will never make it into our machine learning models. Your data is analyzed transiently, so what you write in your online tools stays there.” Most of their case studies talk about how enterprise companies, especially in cyber security and healthcare, were worried about data privacy.

This is why staying ahead of the curve is more critical than ever.

At Cyces, we're committed to staying up-to-date with the latest developments in large language models and LLMOps, ensuring that our clients can confidently navigate this change.

Whether you're curious about the possibilities or eager to experiment with LLMOps, we invite you to get in touch with us to explore how we can work together.

Read more: Digital-first strategy is more relevant now than ever

leveraging tech for

business growth

Cyces.